August 20, 2024

Biamp’s journey to AI- and ML-powered insight

Precog

The following conversation is with Mark Oakley, Enterprise Application Engineer at Biamp; Chris Griffith, CEO of StarPoint Technologies; and Mike Corbisiero, Co-Founder and COO of Precog.

Biamp is a leading provider of innovative, networked media systems that power the world’s most sophisticated audiovisual installations. The company is recognized worldwide for delivering high-quality products and backing each one with a commitment to exceptional customer service. Founded in 1976, Biamp is headquartered in Beaverton, Oregon, USA, with additional offices around the globe. For more information on Biamp, please visit www.biamp.com.

StarPoint Technologies is an award-winning data engineering firm that helps companies maximize performance and streamline operations through data-driven initiatives that leverage vital data streams, analytics, and AI/ML.

Improving Supply Chain Management with ML and AI

Mike Corbisiero (Precog)

Can you share some of the insights you’ve gained as you’ve worked to improve Biamp’s supply chain management through advanced analytical machine learning and AI?

Mark Oakley (Biamp)

We curate data through published data models: currently NYX, soon Infor LN, Infor’s ERP platform. Our methodology is to understand, absorb, and then curate these data models, which we then publish into a data warehouse for insights and tactical analysis. Right now, we’re using Infor AI algorithms to generate sales insights, but in the future, we’ll expand to other areas of the business. We’re trying to surface opportunities by identifying customers based on characteristics: which customers are solid, which are slipping, and which ones have potential upsells. We’re trying not only to increase sales effectiveness but also to provide a 360-degree understanding of the customer. We’re still fleshing a lot of that out, but I can see us forming a Copilot-type tool for our CRM.

ERP Sources: “We Look At Everything”

Mike Corbisiero (Precog)

What sources of data are you working with?

Mark Oakley (Biamp)

We’ve got a CRM, which is Microsoft Dynamics 365. We have a lot of data coming through the pipeline there. We’ve got a product profile representing all the products that a particular customer has. We also look at training. Then we take a look at support history and calls, and troubles that they might be going through. All of this is going to be done through our CRM, but a lot of stuff comes out of AX. We take a look at everything from where we are shipping these things, to where we build them. We want to know where products end up regionally so we can take a look at the numbers demographically.

It’s been going on for about six months, and we’re still in the piloting phase. Some aspects of it are ready to move into production… but there is a kind of silent, quiet AI project going on behind the scenes that’s building steam. We’re starting to get competencies in certain areas. We’re starting to get confidence with some of the data points, especially as it pertains to customers.

To Infor LN from Microsoft AX

Mike Corbisiero (Precog)

Can you clarify what LN is?

Mark Oakley (Biamp)

That’s our new Infor ERP system, which we’re moving to from Microsoft Dynamics AX. The Infor platform has an AI module called Infor AI. We take our curated data sets, which are drawn from several different data sources, and put them together into one big story.

Mike Corbisiero (Precog)

Can you summarize the motivation for migrating to Infor LN?

Mark Oakley (Biamp)

The impetus behind the LN migration is to future-proof our migration strategies and our acquisition strategies. Right now, we’re a multisite, 24-hour operation. We want to be able to bring everything all under one roof and basically integrate multiple sites together, but also to future-proof our acquisition strategies. We want a platform that makes data migration a little bit easier than what we had with the AX platform.

The Point of Partnerships — StarPoint Connects the Dots

Mike Corbisiero (Precog)

How has StarPoint Technologies helped Biamp?

Mark Oakley (Biamp)

StarPoint Technologies is one of the value-added partners Infor brought in. They helped us understand the data lake and data objects as they pertain to LN and reporting.

Chris Griffith (StarPoint Technologies)

Infor brought Inavista in for the LN migration, and brought us in for the analytics side. One of the challenges that I think Biamp has is that Infor OS is not something they created; it’s something Infor created. Infor doesn’t provide native ETL tools for the Infor OS platform, and that’s the fundamental value proposition Precog brings, because Biamp has an analytic, reporting, and AI strategy that stretches beyond the Infor ecosystem.

Precog’s Ability to Bridge the Gap

Mike Corbisiero (Precog)

How exactly is Precog delivering value?

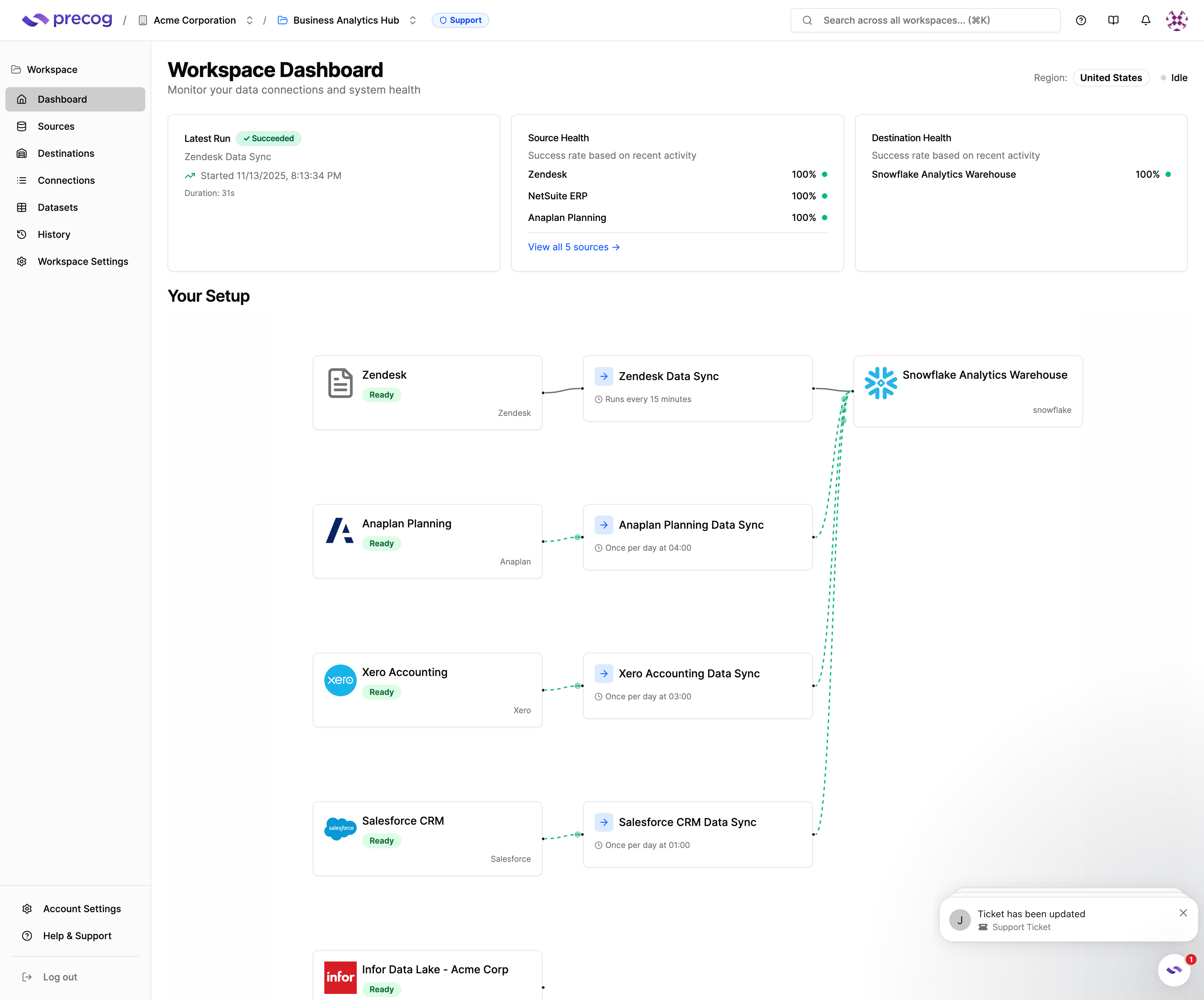

Chris Griffith (StarPoint Technologies)

Precog is a pre-integrated, automated tool for helping Biamp move data out of the Infor ecosystem and get it better aligned with their data centers and our analytic and reporting strategy, which sits more in the Microsoft world. In our experience working with Infor over the past seven years, when a customer isn’t going to fully standardize on Infor, Precog is a necessary tool for loading data.

Mike Corbisiero (Precog)

Mark, you talked about support history, products, destinations, all the dynamics of the sales cycles — is that what’s being loaded?

Mark Oakley (Biamp)

And more. Yes, it does all of our production work orders, product lifecycle management, and price quotes. It runs our shop floors and our production runs: everything end-to-end, cradle to grave, when it comes to selling the product, building the product, shipping the product, and invoicing the product. All of that will be within our LN platform and those workflows. And to Chris’s point, there’s not an efficient way of getting that data. So, if we want to maximize continuous improvement and look for opportunities where there may be inefficiencies in systems that are not natively built within the LN system, you really have to take that data and do something about it. And since we’ve standardized on Power BI, and we’re going to be licensing and rolling with Copilot and some other Microsoft technologies, the value behind what we do is to pull that data out so that we can understand it and use it with our internal capacities and skills, so we don’t have to hire a bunch of contractors to do something so fundamental to the business.

AI-Powered BI Using Infor AI

Mike Corbisiero (Precog)

Is Infor AI technology based on SQL Server, on the data coming into the Infor Data Lake, or both?

Mark Oakley (Biamp)

Into the data lake—they publish their models and all the results into the data lake, and we replicate those locally. Then, we throw Power BI on top of that.

Chris Griffith (StarPoint Technologies)

Mark and his team have to feed some of their external data into the data lake, because Infor AI requires it to be in the data lake in order to pick it up. So he’s talking about the training, and the outputs from the algorithms are also put into the data lake. And, Mark, correct me if I’m wrong, but you’re probably planning on using Precog to pull those outputs as well?

Mark Oakley (Biamp)

We are.

Chris Griffith (StarPoint Technologies)

So those outputs are again dropped off into the data lake just like all the broad transactional data is for LN, and Precog will pick those up. And I think that’s an important distinction because that’s Precog’s role in what I would call the AI/ML side of things. If we think about how Precog is aligning to these different workloads and use cases that Biamp has — it’s capturing the AI/ML outputs from Infor AI and bringing those over so that Mark and his team can align those to some of the analytics and reporting. And then there’s the bringing of the raw transactional data into their SQL Server/data warehouse so that they can then use that for their reporting, analytics and gen AI strategies.

Mark Oakley (Biamp)

We do take data out of SQL Server and feed it back to the data lake, so no matter what, Coleman AI usually sits right on top of the data lake, not only for consumption but for publication as well. AI and ML outputs return to our SQL Server, so that’s another dotted line… And that’s all part of our ins and outs, if you will, because anything that goes into the data lake to any capacity, whether it’s from the ERP or LN-related or integrations with Ephesoft or Esker (OCR software)… we’ve got learning and training environments that we are integrating with so that we can tell when a customer has been trained on things.

Predictive Analytics with Self-serve Capabilities

Mike Corbisiero (Precog)

So the story here? Can you summarize the transformation in your words?

Mark Oakley (Biamp)

The goal is predictive analytics with self-serve capabilities. That’s one of my pitches to management when I’m talking about these capabilities… because eventually if you want to get into predictive, if you want to leverage ML and AI, you’re going to have to have high-quality, reliable data structures. That’s where we’re headed.

Moving to the Cloud Opened Doors To…

Mike Corbisiero (Precog)

What were some of the reasons why you chose to go with Infor?

Mark Oakley (Biamp)

So, that decision was made a little bit before my time, but I think the value proposition Infor had was the most favorable to the management executive team at the time. And so once they saw the capabilities and the scalability, the API, native functionalities and capabilities, they went ahead and started down that road… but the opportunity I saw as I jumped on board was that we’re coming to a hosted environment, we have data that’s no longer going to be on-premise, you’re not gonna be able to touch it. So, a lot of the BI and the data warehouse and capabilities that we’ve built internally were not going to be applicable.

Maintaining Best-in-Class BI Tools for Best-in-Class Teams

Mark Oakley (Biamp)

So that’s what we’re going through to be able to help support and keep our BI competencies viable. We’re going to have to come up with a solution for it, and this is what we came up with to ensure that we have end-to-end integrations with the team and the business, which are able to either launch their own reports or create their own reports. And since we’ve built up our competencies around Power BI, training becomes less of an issue. We’re still going to use Birst, Infor’s native BI tool, but there are just some power users who don’t want to get into LN but prefer Power BI. We want to maintain those conversations so they can continue to process without any disruption to the current process. I’ve supported sales teams across several industries for quite a few years, and I have to say that Biamp has the best BI adoption of any organization I’ve been in. So, I want to make sure I continue to support that synergy.

Where did StarPoint Technologies come from?

Mike Corbisiero (Precog)

Chris, can you discuss where StarPoint Technologies adds value or contributes to the vision?

Chris Griffith (StarPoint Technologies)

For several reasons, Infor felt like we were a good fit to work with Biamp. Number one, we’re an analytics-only partner, and so we’ve got experience within the Infor ecosystem, but specifically experience working both with Infor’s BI product and with the Microsoft product as well. We’re adept at being able to bridge those two worlds. I think one of the things that is critical for Biamp is that they continue to enable the sales teams that are already developed and entrenched and trained on the Power BI side of things to take advantage of those newer technologies, yet maximize some of the value that Birst is going to bring. It’s been more of that architecture and advisory role that we’ve played.

BI 2.0? Speed and Quality of Execution, Time-to-Value

Chris Griffith (StarPoint Technologies)

If you sit in any meeting at Biamp, there’s one thing that you’re going to understand, and it’s that these guys are focused on speed and quality of execution. I think that’s an area where StarPoint Technologies helps. And to come back to one of your earlier questions, Mike: one of the critical reasons that Biamp chose Infor was that it offered a lot of capability right out of the box and was industry-specific in that manufacturing vertical. So the time-to-value, the speed of execution, and the capability that Infor offered right out of the box were among the critical reasons that Biamp chose Infor. But that doesn’t mean that Infor makes things easy at every turn. The example that we’ve talked about, these additional workloads that Mark’s team has outside of the Infor ecosystem, is where Precog comes in and plays a critical role in helping facilitate some additional technical capability and automation, so that the challenge of pulling data out of that Infor ecosystem is something that they no longer have to worry about.

Mark Oakley (Biamp)

Precog is a significant time saver as well, since we don’t have to write all those APIs. And to go back and reinforce Chris’s value add: by pulling them in and having them help us understand the relationships, along with the competencies that they have internally with Infor LN, we will reduce our time to deliver by at least 30%, if not 50% in some cases. So as we go through these deliverables and try to match the requirements, StarPoint Technologies comes in and helps us understand those schemas, publish them right out of our diagramming, and figure out what those relationships look like, so we don’t have to spend weeks and weeks trying to figure them out.

Easy ROI: Five Days to Five Hours

Mike Corbisiero (Precog)

What has the ROI been on using Precog?

Mark Oakley (Biamp)

I’ll give you a use case, and then we can run some numbers.

Let’s say, for example, you’ve got an item table that has all of your items in it, and there are already columns in there. One of the first things you’re going to have to do is figure out what the schema is behind that item table and get it created. Once you get it created, you have to write the API to populate that table. Then you have to schedule that API on whatever schedule you want to be able to copy data from source to destination. All that’s really realistic, but let’s say just this one item table would probably take five days of work.

Using Precog, we took that five days and did it in five hours.

All we do is click the objects that we want to replicate. Precog does all the rest. All we have to do is ensure that Infor is set up for replication, check a box, publish, and go — we’ve got data synchronization. Now let’s say I’ve got 77 objects out there, and I would have to create a database table for each one with all the fields in it. And I’m sure there are tools out there to simplify it, but the short of it is that you still have to go out there, create it, write APIs, synchronize, test it, validate it, you know, and then also do the Change Data Capture, which is a real key one. All that’s already built into the tool. So we don’t have to worry about writing any of that. Not having to worry about Change Data Capture was a moment of relief for me.

I’m a continuous improvement guy, so my perspective is, “If I can give you more time back in your day to do your job than before,”… that’s a win.
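To make the manual work Mark describes more concrete, here is a minimal sketch of the hand-written steps for a single item table: discover the schema, create the destination table, then copy only changed rows on each scheduled run. It is an illustration under stated assumptions, not Precog’s or Infor’s actual mechanism. Both “systems” are in-memory SQLite databases, the `item` table, its columns, and the sample rows are made up, and change tracking is approximated with a hypothetical `modified_at` watermark column (a simple stand-in for Change Data Capture that would not detect deletes).

```python
import sqlite3


def create_mirror_table(src, dst, table):
    """Read the source table's columns and create a matching destination table."""
    # Each PRAGMA row is (cid, name, type, notnull, default_value, pk).
    cols = src.execute(f"PRAGMA table_info({table})").fetchall()
    col_defs = ", ".join(
        f"{c[1]} {c[2]}{' PRIMARY KEY' if c[5] else ''}" for c in cols
    )
    dst.execute(f"CREATE TABLE IF NOT EXISTS {table} ({col_defs})")
    return [c[1] for c in cols]


def sync_changes(src, dst, table, columns, watermark):
    """Copy rows modified after `watermark` and return the new watermark."""
    col_list = ", ".join(columns)
    placeholders = ", ".join("?" for _ in columns)
    rows = src.execute(
        f"SELECT {col_list} FROM {table} "
        "WHERE modified_at > ? ORDER BY modified_at",
        (watermark,),
    ).fetchall()
    # Upsert: a changed row replaces the older copy with the same primary key.
    dst.executemany(
        f"INSERT OR REPLACE INTO {table} ({col_list}) VALUES ({placeholders})",
        rows,
    )
    dst.commit()
    if rows:
        watermark = rows[-1][columns.index("modified_at")]
    return watermark


# Hypothetical source system with a small item table (illustrative data only).
src = sqlite3.connect(":memory:")
dst = sqlite3.connect(":memory:")
src.execute(
    "CREATE TABLE item (item_id TEXT PRIMARY KEY, description TEXT, modified_at INTEGER)"
)
src.executemany(
    "INSERT INTO item VALUES (?, ?, ?)",
    [("A-100", "Amplifier", 1), ("M-200", "Microphone", 2)],
)
src.commit()

# Steps 1 and 2: discover the schema and create the destination table.
columns = create_mirror_table(src, dst, "item")
# Step 3: initial load; in practice this call would run on a schedule.
watermark = sync_changes(src, dst, "item", columns, watermark=0)

# A later change at the source is picked up by the next run.
src.execute(
    "UPDATE item SET description = 'Amplifier v2', modified_at = 3 WHERE item_id = 'A-100'"
)
src.commit()
watermark = sync_changes(src, dst, "item", columns, watermark)
```

Even in this toy form, every table needs its own schema discovery, load logic, scheduling, and testing, which is the per-object effort Mark says the tool removes.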
