November 20, 2025

ETL? ELT? Just give me my data!

Precog

Discover the key differences between ELT and ETL, and why businesses are moving to ELT for faster, more scalable data pipelines.

Sometimes the jargon gets in the way of the “thing.” In this case, we know all you want is your data. Here’s a quick refresher in case you need it. Remember, Precog is an “enterprise ELT platform.” In plain English: we’re the ones who get your data out of your applications and into a format and a place where you can get to work.

What is ELT and how is it different from ETL?

ELT (Extract, Load, Transform) and ETL (Extract, Transform, Load) are both data integration processes, but they differ in the order of operations.

In ETL, data is extracted from a source, transformed on a separate server, and then loaded into a data warehouse. In ELT, data is extracted and immediately loaded, in its raw form, into a powerful data warehouse. The transformation step happens after the data is in the warehouse, using the warehouse’s own computing power. This ordering is the key difference, and it is why ELT is typically faster at loading large volumes of data: nothing waits on a separate transformation server before the load.
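To make the ordering concrete, here is a minimal sketch of the ELT sequence. It assumes an in-memory SQLite database standing in for a cloud data warehouse; the table names (`raw_orders`, `orders`) and the sample rows are hypothetical and not taken from any particular tool.

```python
import sqlite3

# Hypothetical rows, as an ingestion tool might extract them from a source.
source_rows = [
    (1, " Alice ", "2025-01-03", "19.99"),
    (2, "BOB", "2025-01-03", "5.00"),
    (3, "carol", "2025-01-04", "12.50"),
]

# Assumption: in-memory SQLite stands in for the data warehouse.
warehouse = sqlite3.connect(":memory:")

# Extract + Load: land the rows untouched in a raw table.
warehouse.execute(
    "CREATE TABLE raw_orders (id INTEGER, customer TEXT, order_date TEXT, amount TEXT)"
)
warehouse.executemany("INSERT INTO raw_orders VALUES (?, ?, ?, ?)", source_rows)

# Transform: runs after loading, inside the warehouse, using its SQL engine.
warehouse.execute(
    """
    CREATE TABLE orders AS
    SELECT id,
           LOWER(TRIM(customer)) AS customer,
           order_date,
           CAST(amount AS REAL) AS amount
    FROM raw_orders
    """
)

clean_rows = warehouse.execute(
    "SELECT id, customer, amount FROM orders ORDER BY id"
).fetchall()
raw_count = warehouse.execute("SELECT COUNT(*) FROM raw_orders").fetchone()[0]

print(clean_rows)  # cleaned rows produced by the in-warehouse transform
print(raw_count)   # the raw table still holds every loaded row
```

In an ETL version of the same job, the trimming and casting would happen in Python (or on a separate server) before the `INSERT`, and only the cleaned rows would reach the warehouse.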

Why is ELT the modern approach?

ELT is considered modern because it takes advantage of the scalability and power of cloud data warehouses like Snowflake, BigQuery, Redshift, SAP Datasphere, Databricks, Aurora and more. These platforms can handle massive amounts of data and perform complex transformations much faster and more cost-effectively than traditional, standalone transformation servers used in ETL. It also allows you to keep a copy of the raw data in the warehouse, giving you more flexibility for future analysis without having to re-extract the data from the source.

What are the key components of an ELT pipeline?

An ELT pipeline has three main components:

- Sources: The original systems where data is generated (e.g., a CRM, a database, a web application).

- Ingestion Tool: This is the software that handles the Extract and Load steps. It connects to the sources and loads the raw data into the data warehouse. Precog is one of these tools.

- Data Warehouse: The central repository for all the data. It’s where the raw data lives and where the Transform step takes place.

What is a “data warehouse” and a “data lake,” and what is their role in ELT?

- A data warehouse is a structured repository for clean, transformed data. It’s optimized for fast querying and business intelligence. In ELT, it’s both the destination for raw data and the engine where data is transformed into a usable format.

- A data lake is a storage repository for all types of data—structured, semi-structured, and unstructured—in its raw format. It’s often used when you need to store vast amounts of raw data for future or exploratory analysis. In some ELT architectures, the data lake is the initial loading destination before a more structured data warehouse.

What does “transformation” really mean?

Transformation is the process of cleaning, restructuring, and enriching raw data to make it useful for analysis. It’s where you apply your business logic. Examples include:

- Cleaning: Fixing typos, handling missing values, or standardizing formats.

- Enriching: Joining data from different tables (e.g., connecting customer data with their orders).

- Aggregating: Calculating key metrics like total sales per day or average customer lifetime value.
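The three kinds of transformation above can be sketched as SQL views layered on raw tables. This is an illustration only: SQLite stands in for the warehouse, and the table names, view names, and sample data are all hypothetical.

```python
import sqlite3

db = sqlite3.connect(":memory:")  # assumption: stand-in for a data warehouse
db.executescript(
    """
    CREATE TABLE raw_customers (id INTEGER, name TEXT, country TEXT);
    CREATE TABLE raw_orders (id INTEGER, customer_id INTEGER, order_date TEXT, amount REAL);

    INSERT INTO raw_customers VALUES (1, ' Ada ', 'us'), (2, 'Grace', NULL);
    INSERT INTO raw_orders VALUES
        (10, 1, '2025-01-03', 20.0),
        (11, 2, '2025-01-03', 5.0),
        (12, 1, '2025-01-04', 12.5);

    -- Cleaning: trim whitespace, standardize case, fill missing values.
    CREATE VIEW customers AS
    SELECT id,
           TRIM(name) AS name,
           UPPER(COALESCE(country, 'unknown')) AS country
    FROM raw_customers;

    -- Enriching: join each order to its customer.
    CREATE VIEW orders_enriched AS
    SELECT o.id AS order_id, o.order_date, o.amount, c.name, c.country
    FROM raw_orders AS o
    JOIN customers AS c ON c.id = o.customer_id;

    -- Aggregating: total sales per day.
    CREATE VIEW daily_sales AS
    SELECT order_date, SUM(amount) AS total_sales
    FROM orders_enriched
    GROUP BY order_date;
    """
)

customers = db.execute("SELECT name, country FROM customers ORDER BY id").fetchall()
daily_sales = db.execute(
    "SELECT order_date, total_sales FROM daily_sales ORDER BY order_date"
).fetchall()

print(customers)
print(daily_sales)
```

Because each step is a view over the raw tables, the raw data is never modified, and a rule can be changed by redefining one view.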

Where does the “business logic” live in an ELT process?

Business logic lives in the transformation step of the ELT process. This is where you define the rules for how raw data becomes a useful business metric. Tools like dbt (data build tool) are very popular for managing these transformations. They allow you to write transformation logic in SQL, which is then run directly within the data warehouse.

How does ELT impact data quality?

ELT processes can actually improve data quality. By loading raw data first, you have a historical record of the original data. Transformations can then be built on top of this raw data to fix inconsistencies, validate data types, and ensure data integrity. If a transformation fails, the raw data remains untouched, making it easier to debug and fix.

How does the ELT process handle changing source data?

Most modern ingestion tools handle schema changes automatically. If a new column is added to the source, the tool will detect it and add it to the corresponding table in the data warehouse. If a column is removed or a data type changes, the tools usually offer settings to either ignore the change, warn you, or stop the sync, depending on the severity. This automation significantly reduces the maintenance burden.
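As a rough illustration of what schema-change handling involves, the sketch below compares a source's column list with the warehouse table, adds any new columns, and reports columns that have disappeared so that a policy (ignore, warn, or stop) can be applied. The function `sync_new_columns` and the `contacts` table are hypothetical; real ingestion tools implement this in their own ways.

```python
import sqlite3


def sync_new_columns(conn, table, source_columns):
    """Add columns present in the source but missing in the warehouse table.

    `source_columns` maps column name -> SQL type. Names are interpolated
    into SQL, so they must come from a trusted schema, not from user input.
    Returns (added, removed): columns added here, and columns that exist in
    the warehouse but no longer in the source.
    """
    # PRAGMA table_info yields one row per column; index 1 is the column name.
    existing = {row[1] for row in conn.execute(f"PRAGMA table_info({table})")}
    added = []
    for name, sql_type in source_columns.items():
        if name not in existing:
            conn.execute(f"ALTER TABLE {table} ADD COLUMN {name} {sql_type}")
            added.append(name)
    removed = sorted(existing - set(source_columns))
    return added, removed


conn = sqlite3.connect(":memory:")  # assumption: stand-in for a warehouse
conn.execute("CREATE TABLE contacts (id INTEGER, email TEXT, fax TEXT)")

# Hypothetical source schema: `phone` is new, `fax` has been dropped.
source_schema = {"id": "INTEGER", "email": "TEXT", "phone": "TEXT"}
added, removed = sync_new_columns(conn, "contacts", source_schema)

columns_after = [row[1] for row in conn.execute("PRAGMA table_info(contacts)")]
print(added, removed, columns_after)
```

The sketch deliberately leaves the removed column in place and only reports it, which corresponds to the "warn" setting described above.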

What is the difference between a “full load” and an “incremental load”?

- A full load (or full refresh) extracts and loads all the data from a source system every time the pipeline runs. This is simple but can be very time-consuming and expensive for large datasets.

- An incremental load only extracts and loads the data that has changed since the last time the pipeline ran. This is much more efficient and is the standard practice for large or frequently updated data sources. It relies on a “bookmark,” such as a timestamp or an auto-incrementing ID, to track what’s new.
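A minimal sketch of an incremental load driven by a timestamp bookmark follows. It assumes two in-memory SQLite databases standing in for the source and the warehouse, and an `updated_at` column stored as ISO 8601 text (which sorts correctly as a string); the function name `incremental_load` is hypothetical.

```python
import sqlite3

# Assumption: SQLite stands in for both the source system and the warehouse.
source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE orders (id INTEGER, updated_at TEXT)")
warehouse = sqlite3.connect(":memory:")
warehouse.execute("CREATE TABLE raw_orders (id INTEGER, updated_at TEXT)")


def incremental_load(bookmark):
    """Copy rows changed after `bookmark`; return (rows_loaded, new_bookmark)."""
    rows = source.execute(
        "SELECT id, updated_at FROM orders WHERE updated_at > ? ORDER BY updated_at",
        (bookmark,),
    ).fetchall()
    warehouse.executemany("INSERT INTO raw_orders VALUES (?, ?)", rows)
    # Advance the bookmark to the newest timestamp seen, or keep it unchanged.
    new_bookmark = rows[-1][1] if rows else bookmark
    return len(rows), new_bookmark


source.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [(1, "2025-01-01T09:00:00"), (2, "2025-01-01T10:00:00")],
)
first_count, bookmark = incremental_load("")  # empty bookmark: load everything

source.execute("INSERT INTO orders VALUES (3, '2025-01-02T08:00:00')")
second_count, bookmark = incremental_load(bookmark)  # only the new row
third_count, bookmark = incremental_load(bookmark)   # nothing has changed

print(first_count, second_count, third_count, bookmark)
```

This sketch only appends. A production pipeline would typically also upsert rows whose `updated_at` moved forward and handle deletes, which a timestamp bookmark alone cannot detect.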

How do I know if the ELT pipeline is running correctly?

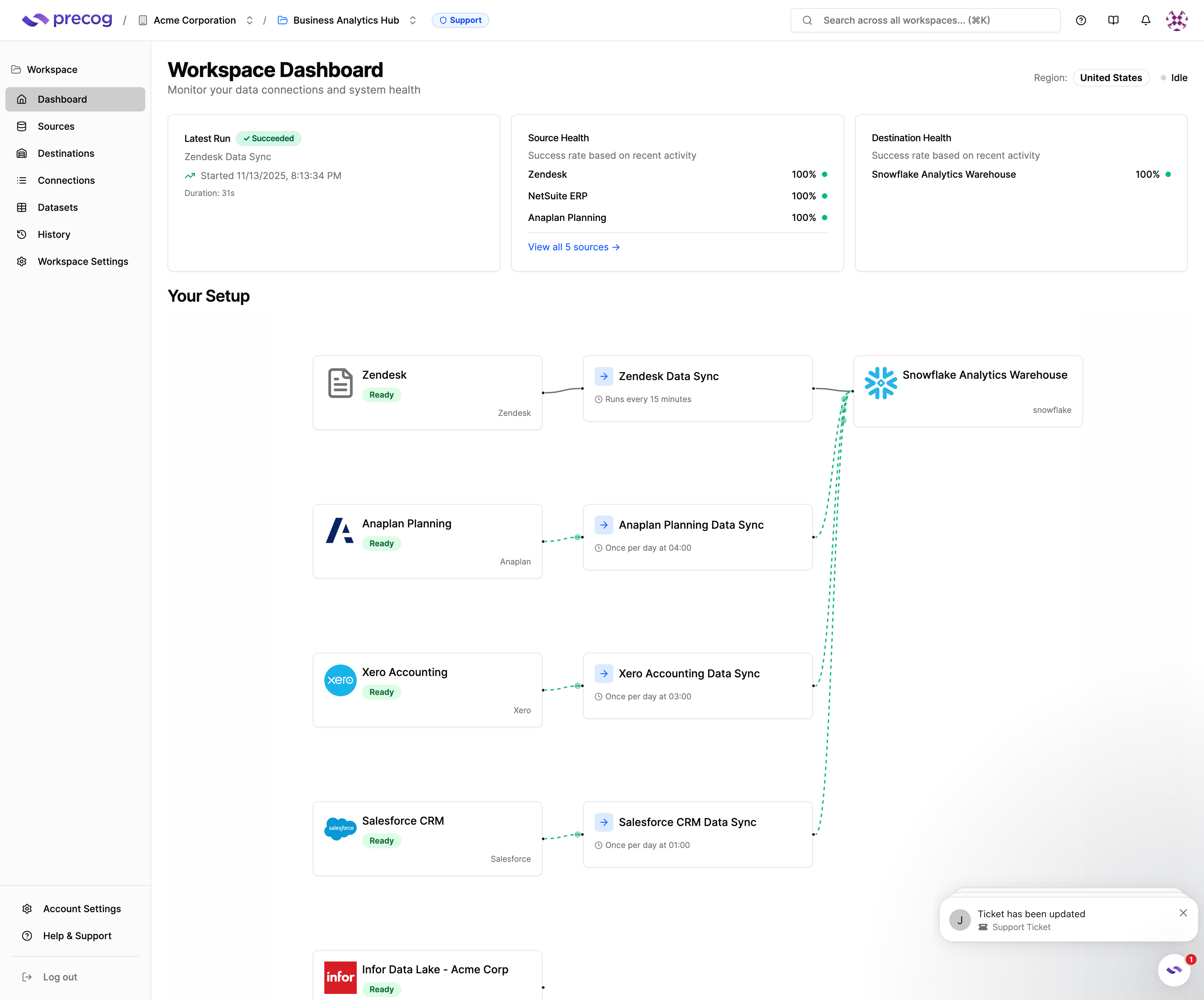

Most ELT tools come with a monitoring dashboard. You can log in and see the status of each pipeline, including when it last ran, whether it succeeded or failed, and how many rows were loaded. You can also set up alerts to get notified via email or a messaging platform like Slack if a pipeline fails.

What happens when the pipeline fails?

When an ELT pipeline fails, the ingestion tool typically sends a notification. You would then check the pipeline to see the error message. Common causes include:

- Source system changes (e.g., a table was renamed).

- API connection issues.

- Insufficient permissions.

The first step is to identify the root cause from the logs and then contact the appropriate team (e.g., the data engineering team) with the specific error information.

How long does it take for data to be available for my reports?

This is known as data latency. The time it takes depends on two factors:

- Ingestion schedule: How often the ingestion tool runs (e.g., every 15 minutes, hourly, or daily).

- Transformation schedule: How often the transformation models are run after the data is loaded.

In the worst case, the total delay is roughly the sum of these two intervals, plus the time the runs themselves take. For many businesses, an hourly or daily cadence is sufficient, providing fresh data within a predictable timeframe.
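As a quick worked example, the sketch below adds the two schedule intervals to estimate how long a change might wait before it shows up in a report. It is a simplification: it assumes the two schedules run independently and ignores how long each run takes. The function name `worst_case_latency` is hypothetical.

```python
from datetime import timedelta


def worst_case_latency(ingestion_interval, transformation_interval):
    """Upper-bound estimate of the wait before a change reaches reports.

    A change can just miss an ingestion run (waiting up to one ingestion
    interval) and then just miss a transformation run (waiting up to one
    transformation interval). Run durations are ignored here.
    """
    return ingestion_interval + transformation_interval


# Ingestion every 15 minutes, transformations every hour.
latency = worst_case_latency(timedelta(minutes=15), timedelta(hours=1))
print(latency)  # 1:15:00
```

If the transformation is triggered immediately after each ingestion run instead of on its own schedule, the second term shrinks to the transformation's run time.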

Can I get access to the raw data?

Yes, a key benefit of the ELT approach is that the raw, untransformed data is readily available in the data warehouse. Unlike traditional ETL where the raw data might be discarded, ELT preserves it. This allows you to run ad-hoc queries, test new business logic, or re-engineer transformations without needing to go back to the source system.

How do we ensure data privacy and security in the ELT process?

Data privacy and security are managed at several points:

- Ingestion: Most tools use secure, encrypted connections to extract data.

- Data Warehouse: Access to the data warehouse is controlled by roles and permissions. Only authorized people can view or query the data.

- Transformation: Personally identifiable information (PII) can be masked, hashed, or anonymized during the transformation step to protect sensitive data before it reaches the final, user-facing tables.
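Masking and hashing of PII during transformation might look something like the sketch below. It uses a keyed hash (HMAC-SHA256) from the Python standard library so that values cannot be recovered by simply hashing guesses without the key. The key value and the helper names `hash_pii` and `mask_email` are hypothetical; in practice the key would come from a secrets manager, and many warehouses offer their own masking features.

```python
import hashlib
import hmac

# Hypothetical key for illustration only; load a real one from a secrets manager.
SECRET_KEY = b"replace-with-a-managed-secret"


def hash_pii(value):
    """Return a stable, keyed SHA-256 digest of a normalized PII value."""
    normalized = value.strip().lower().encode("utf-8")
    return hmac.new(SECRET_KEY, normalized, hashlib.sha256).hexdigest()


def mask_email(email):
    """Keep the first character and the domain; hide the rest of the local part."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}***@{domain}"


masked = mask_email("ada@example.com")
token_a = hash_pii(" Ada@Example.com ")
token_b = hash_pii("ada@example.com")

print(masked)              # a***@example.com
print(token_a == token_b)  # True: normalization makes the token stable
```

Hashing keeps a value usable as a join key across tables without exposing it, while masking keeps it partially readable for support or debugging.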
