December 4, 2025

The Five Pitfalls of Legacy ELT in the AI Era

Jon Finegold

Legacy tools were built to move rows and columns. You need to power intelligence.

As enterprises rush to unlock value from AI, they are discovering a hard truth: existing tools and processes are holding them back. AI models require not only access to data locked in dozens of mission-critical applications but also context, relationships and semantic understanding of that data.

AI is ready. Your data is not. Models are powerful enough to deliver results, but only if they can access the data and understand it. If you are feeding modern AI with legacy pipelines, you are starving your models of the context needed to deliver results.

The Five Pitfalls of Legacy ELT

1. Limited Application Coverage Prohibits Full Insights: AI initiatives depend on connecting dozens of niche, vertical-specific systems, not just a few popular applications. Legacy ELT vendors offer a fixed menu of connectors. If your app isn't on their list, you wait months for them to build it, or you waste engineering cycles building it yourself. By the time the data arrives, the business requirements have changed.

2. Chokes on Complex Data: Legacy pipelines struggle with the reality of complex SaaS data. They often miss custom and calculated fields and fail to handle large, multi-dimensional objects. This results in a "partial truth" as only part of the data is delivered. Without the full picture, the models fall short. If the Chief Revenue Officer is looking for a customer 360 view, you can’t deliver a 180.

3. Lacks Semantic Models: Older tools focus on delivering payloads of rows and columns. When the data lands, it takes massive manual efforts to make sense of it. Humans can’t easily work with data without context and neither can LLMs. Without semantic understanding or entity modeling, you are left with unintelligible tables. This lack of context leads directly to model hallucinations and poor performance.

4. Fragile Pipelines that Can’t Self-Heal: Modern SaaS APIs evolve constantly. A renamed field, a new pagination rule, or an auth change is often enough to shatter a legacy pipeline. Because these tools lack automated schema evolution, reliability suffers while your team spends valuable time patching broken connections manually.

5. Cost-Prohibitive at Scale: What looks cheap up front becomes expensive to operate. The build-it-yourself model seems appealing until pipelines don’t scale and require ongoing maintenance. In addition, row-based pricing penalizes growth and adds unpredictability to your budget forecasts. As you scale, these costs become unsustainable.

The Precog Advantage

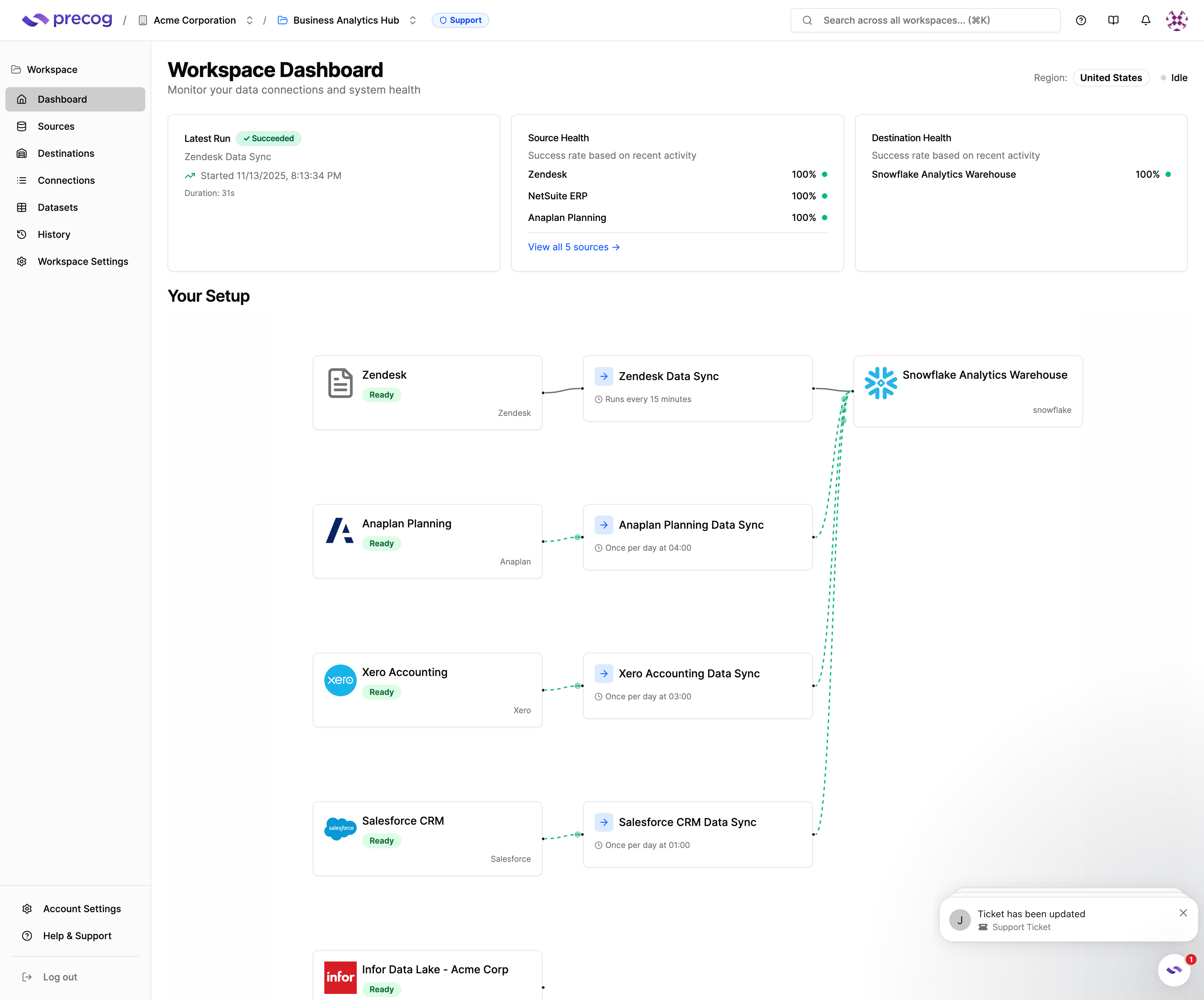

Precog was designed from the ground up for the AI era. We automate the delivery of AI-ready data from any SaaS application into Snowflake, Databricks, and other modern data platforms.

The Six Pillars of Precog

- AI-driven Connector Generation: Ingest complex, evolving SaaS APIs with no coding required. New connectors can be added in hours, with more than 2,000 pre-configured.

- Automated Schema Evolution: Adapt to source changes without pipeline rebuilds.

- Full-Fidelity Data Capture: Preserve all fields, including custom and calculated fields and long-tail data that drive accuracy.

- Semantic Modeling: Transform raw payloads into business entities that AI (and humans) can actually understand.

- Enterprise-Grade: Manage all your application pipelines from a unified interface with enterprise class visibility and control.

- Operate at Scale: Precog was designed for scale and can handle even the largest data payloads, all with simple and predictable pricing that aligns with the business value derived.

.svg)